GPT-5 is an incredibly capable model. On our internal extraction benchmarks, it consistently sets a high bar for multimodal reasoning and structured output generation.

We’re often asked: When should we use GPT-5 directly for parsing documents versus building on a more specialized pipeline?

If you:

- have simple, uniform document types,

- don’t need 99%+ accuracy,

- aren’t latency-sensitive, and

- don’t need platform tooling around your processing (e.g. bounding boxes)

Using GPT-5 directly might be the simplest and most efficient path. You’ll avoid added complexity and third-party dependencies.

However, a large number of use cases don’t fall into these categories. For anyone building document ingestion pipelines in high-stakes verticals (e.g. healthcare, financial services, insurance, real estate) where documents can be messy, going direct to an LLM often leads to subtle issues.

Below, we share several real-world examples where GPT-5’s raw capabilities weren’t enough. These cases reveal common patterns about where general-purpose VLMs still fall short and where the opportunity for improvement lies.

Methodology

For all tests, we followed a consistent setup:

- Full document input to GPT-5 via API Playground

- High-reasoning mode enabled

- OmniAI Zerox prompts for HTML/Markdown parsing

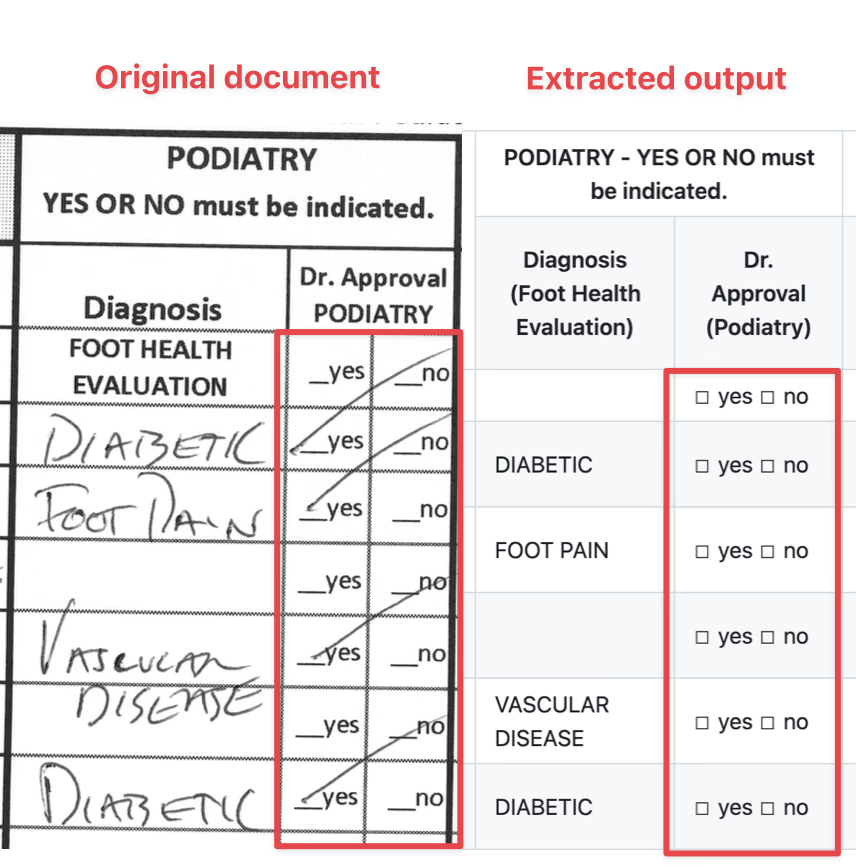

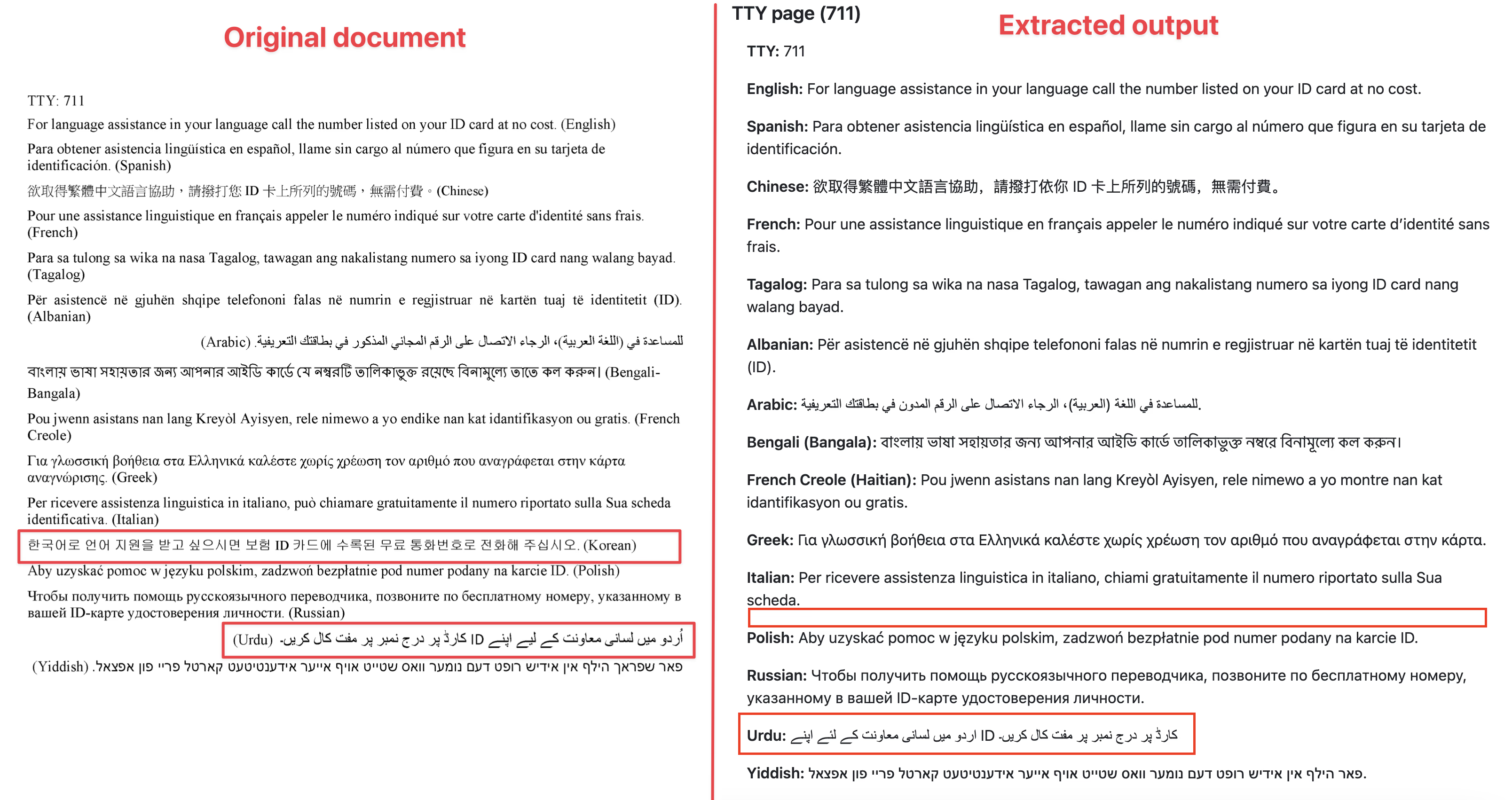

Checkboxes

Checkboxes are simple for humans, but surprisingly one of the hardest edge cases for VLMs.

GPT-5 failed to extract the checkmarks inside boxes, even under high-effort settings. Latency also spiked, taking over two minutes for a single page.

Checkboxes often appear in varied non-standard formats. Traditional OCR handles simple cases better, but struggles on the long tail of messy edge cases. Multimodal LLMs are more generalized, but not reliable enough for production use cases like these.

For use cases demanding high accuracy where a single missed checkbox can have downstream impact (e.g. healthcare orders or claims), hybrid OCR + fine-tuned VLM pipelines achieve better performance.

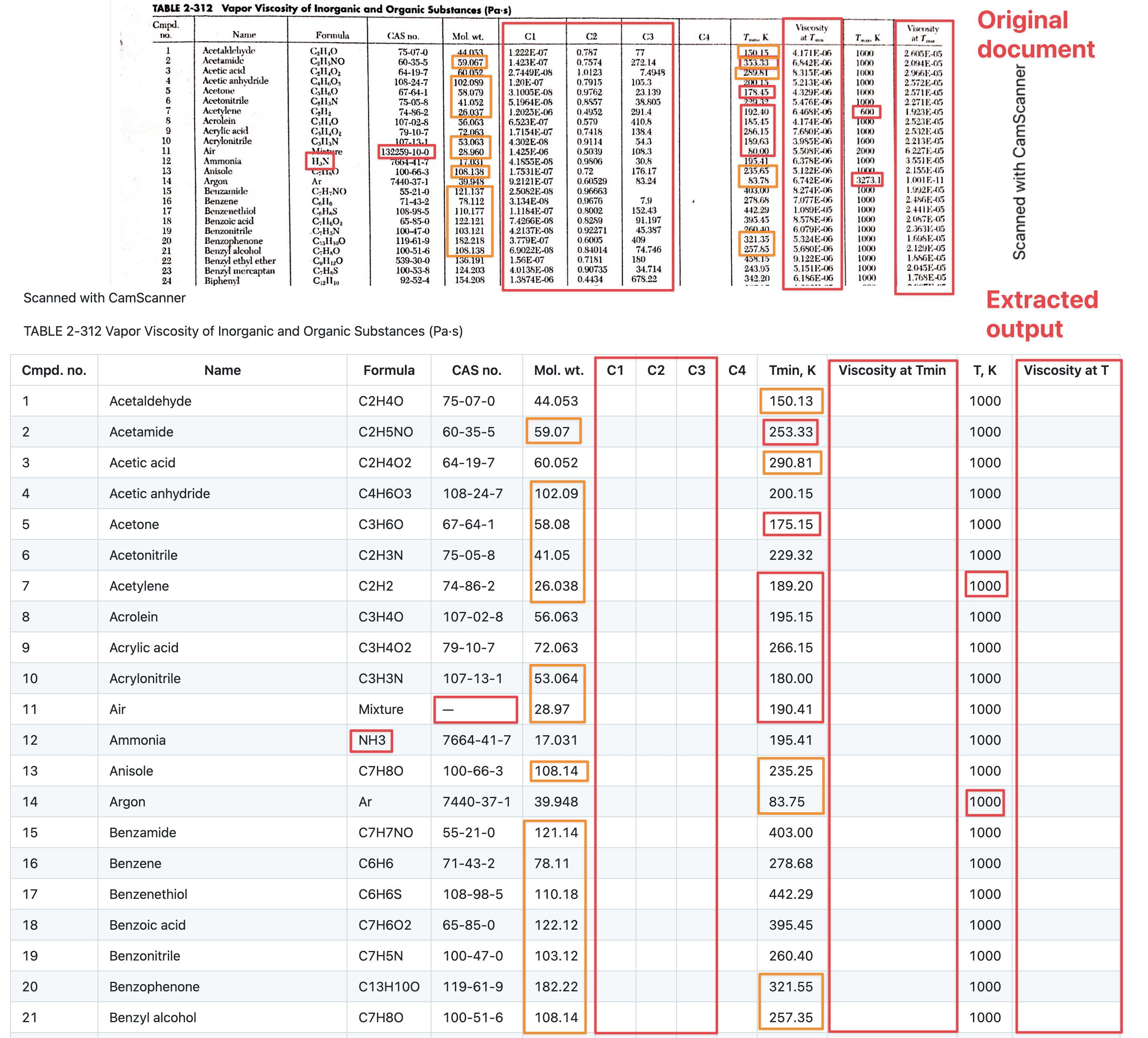

Low Resolution Hallucinations

In the real world, documents are visually messy: low-resolution, poorly scanned, or rotated and skewed.

GPT-5 dropped entire columns of data due to resolution limits, which is a common issue with poorly scanned documents. The latency was also very high (7m21s).

In addition, we detect a number of hallucinations within the document when the model made rounding errors (highlighted in orange) on precise numbers.

LLMs are designed to complete the most probable token and thus make logical leaps like these. This is very different from the job of parsing documents, where we aim to return a pixel perfect, ingestion-ready representation of the document.

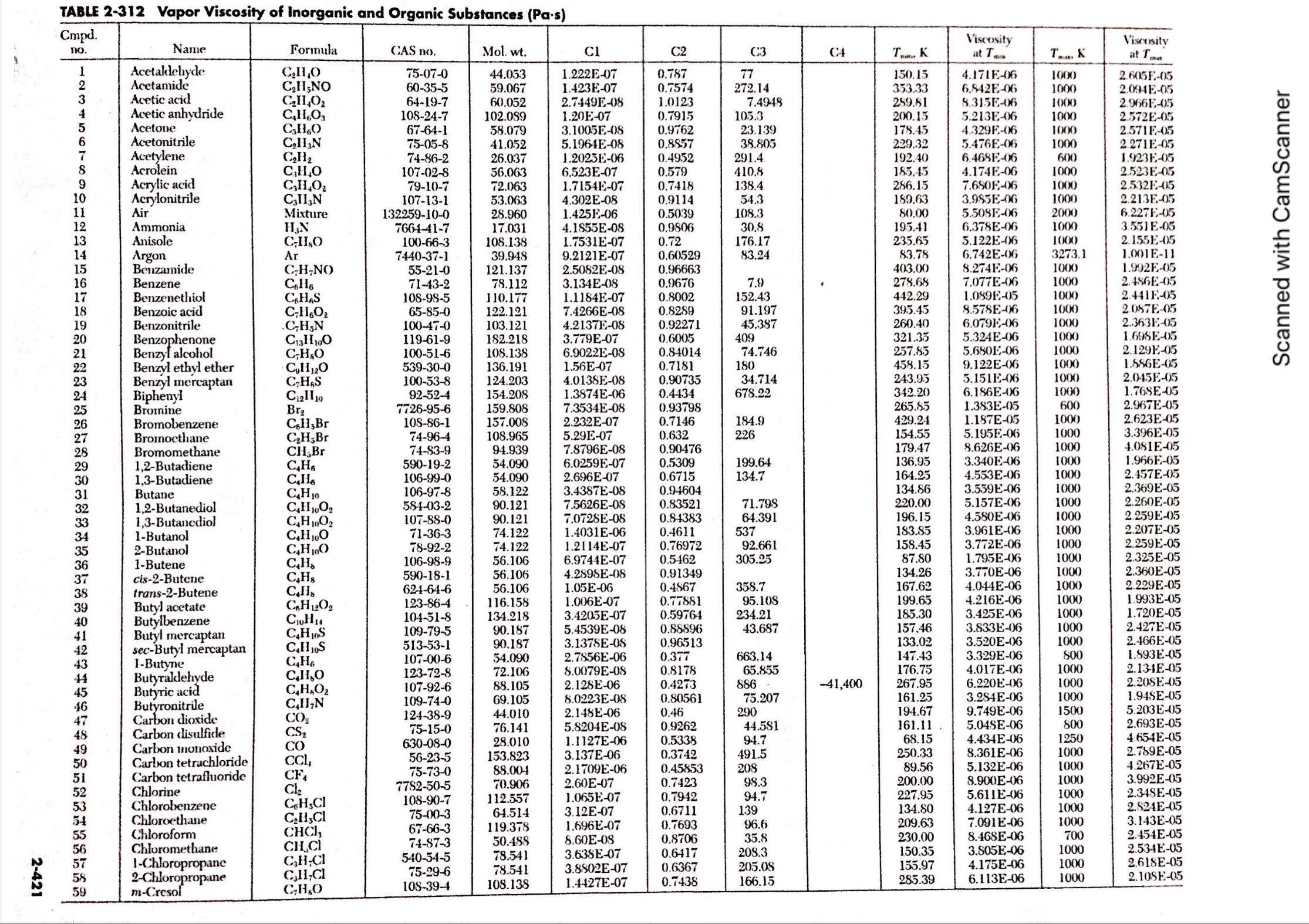

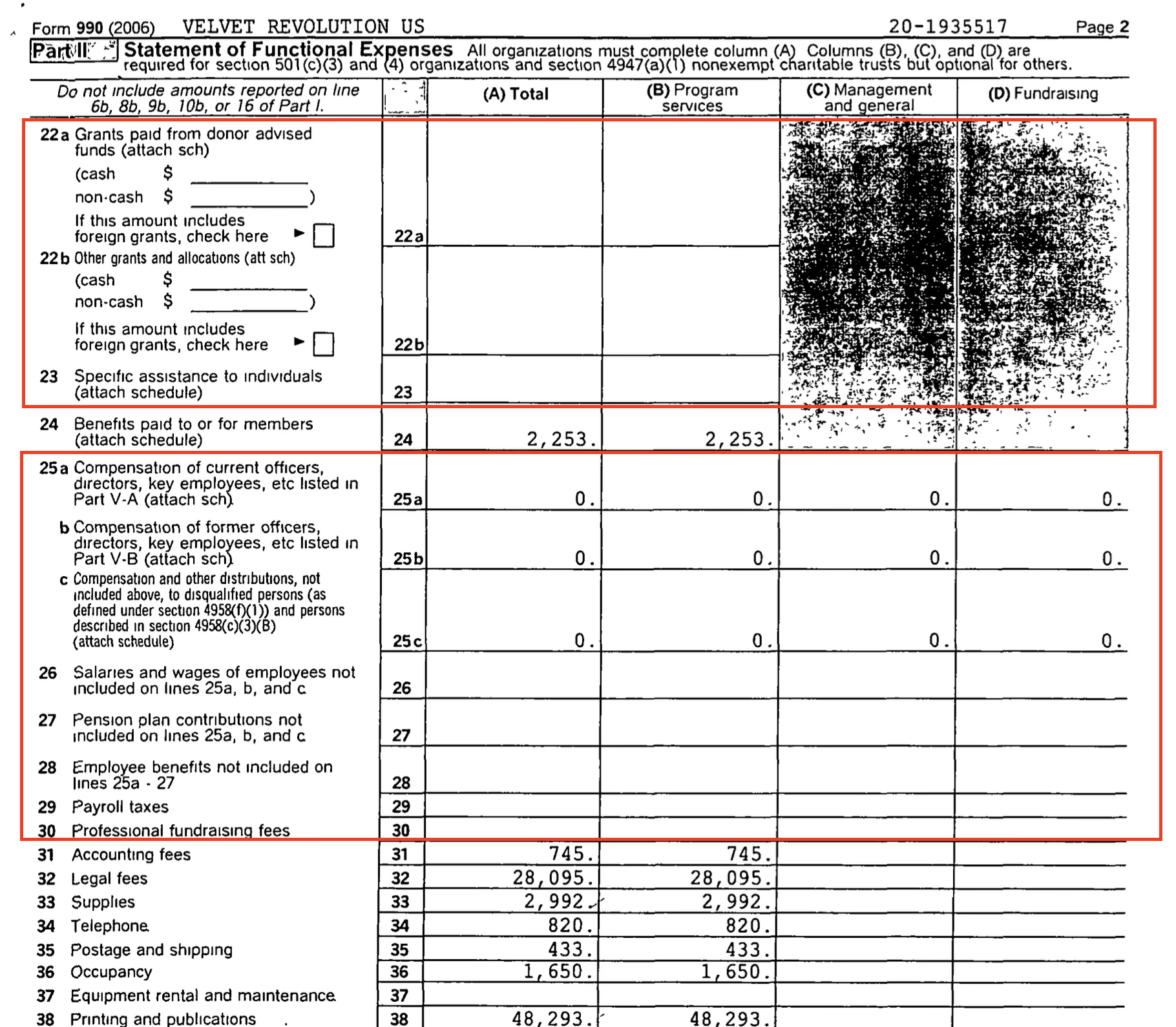

Long Arrays

Lengthy arrays are still an unsolved challenge for all solutions, and GPT-5 is no exception. We provided this 11 page document to GPT-5 to see if it could reliably extract a table that spanned several pages.

While parsing this lengthy table, GPT-5 skipped several rows and fields. A section of the original document is below, with missing portions indicated in red:

GPT-5 Rendered HTML

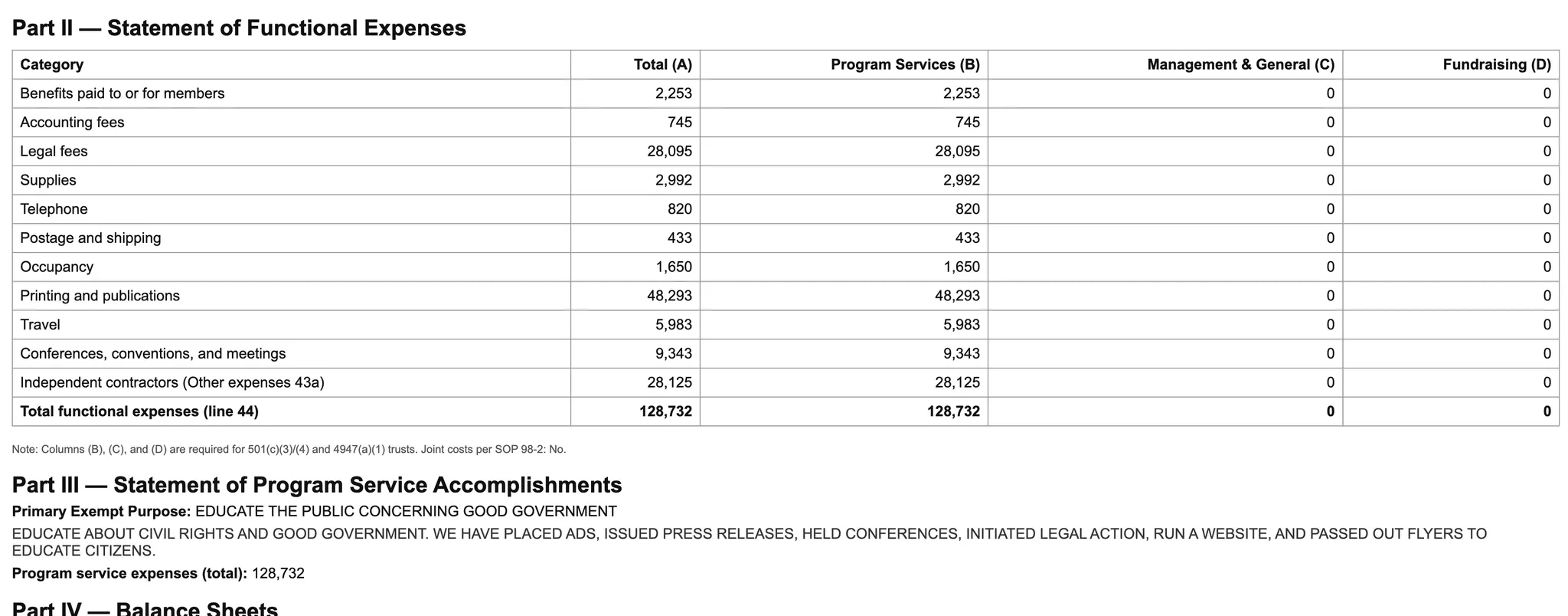

Multi-language Support

Multilingual documents add another layer of complexity.

We gave this multi-language document to GPT-5 and while it nailed the simpler and cleaner data on the first pages, it had several multiple misses in the alternate languages section.

It performed very well at Latin-script alphabet languages and more popular non-English languages such as Greek, Russian, and Chinese, but struggled with the less-common language Urdu, and completely dropped the Korean translation.

What this means for engineering teams:

Frontier models like GPT-5 are major leaps in general reasoning. But the task of document processing requires specificity, not generality.

In our tests (which can be easily replicated above):

- checkboxes went undetected,

- low-resolution scans were partially dropped,

- long tables lost data integrity, and

- multilingual content wasn’t consistently captured.

For simple, uniform documents, GPT-5 can be cost-effective and elegant. But at scale in the real world, these edge cases compound fast.

LLMs are incredible reasoning engines, but document parsing is a precision task. If your ingestion stage is lossy or wrong, every downstream step inherits that error. That’s why we (and many of our customers) treat GPT-5 as one component in a broader architecture: pairing OCR engines for text fidelity, VLMs for structural understanding, and LLMs for post-processing and review.