8 MIN READ

Dec 11, 2025

Blog Post

Why AI-Powered Unstructured Data Processing Is Important in December 2025

Kushal Byatnal

Co-founder, CEO

Organizations waste countless hours managing documents that could be handled automatically. With a lot of business data trapped in unstructured formats, legacy OCR systems fall short, breaking on complex layouts, handwriting, and real-world variability. Fortunately, new advances in AI for unstructured data are changing that, delivering production-level accuracy and adaptability that make true automation possible. Learn how next-generation document intelligence is turning document processing from a costly bottleneck into a competitive advantage.

TLDR:

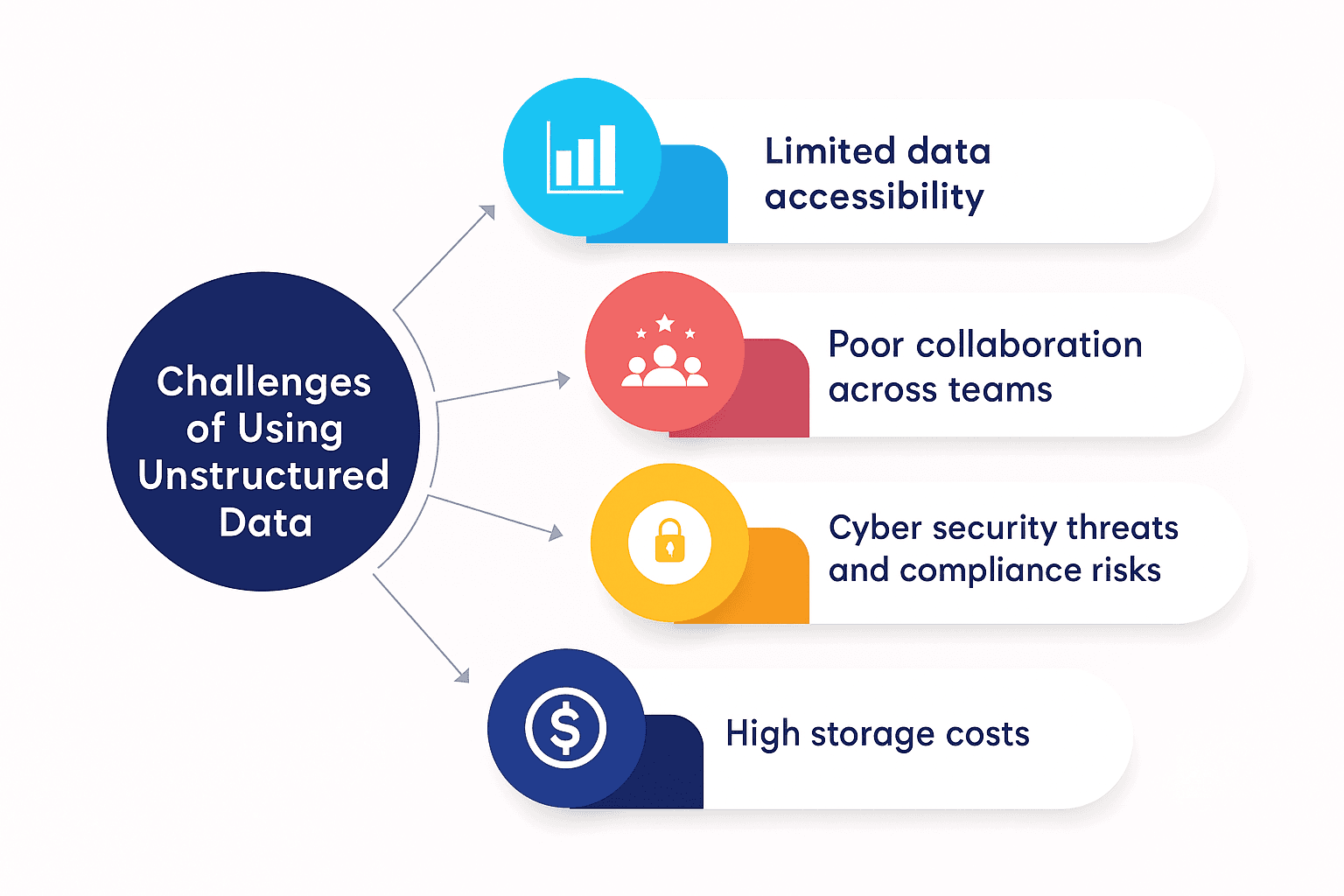

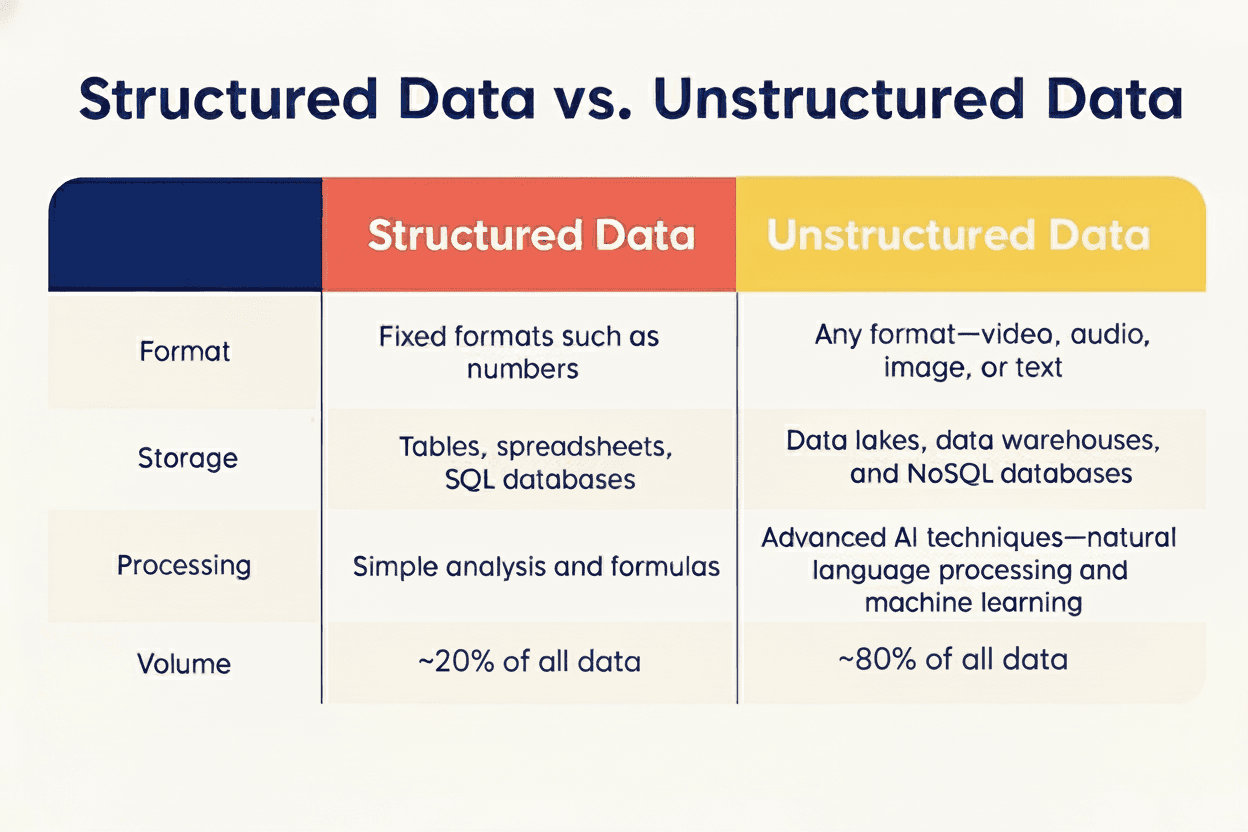

Over 80% of business data is unstructured and growing exponentially, creating massive competitive gaps between companies that can process it effectively and those stuck with manual workflows

Traditional OCR achieves only 80% accuracy and breaks on complex layouts, handwriting, and document variations, making it unsuitable for mission-critical business processes

LLMs understand document context and meaning beyond just text recognition, allowing 99%+ accuracy through semantic chunking and cross-field validation

Custom model fine-tuning for domain-specific documents delivers disruptive results, with healthcare organizations seeing accuracy improvements and financial services reducing processing time

Some production-ready document AI systems support feedback loops and continuous learning, reducing deployment times from months to weeks

The Scale of Unstructured Data Challenge in 2025

The numbers tell a stark story. More than 80% of business data exists in unstructured formats, and large organizations generally produce about four times as much unstructured data as structured data.

The traditional approach of manual data entry or basic OCR tools simply can't scale with this volume.

Organizations that can unlock value from unstructured data gain competitive advantages in speed, accuracy, and performance that compound over time.

The teams still doing this manually are burning resources on repetitive tasks while missing opportunities for real-time insights.

The gap between data-rich and data-poor organizations is widening rapidly. Companies that master AI data extraction can automate workflows that previously required armies of data entry clerks, freeing up human resources for higher-value work.

Why Traditional OCR Falls Short for Complex Documents

Traditional OCR was built for a simpler world. These legacy systems excel at reading clean, typed text from standardized forms but crumble when faced with the messy reality of business documents.

The core problem is rigidity. Traditional OCR relies on template matching and rule-based extraction that requires extensive manual configuration for each document type. When you encounter a new invoice format or a slightly rotated scan, the entire system needs recalibration.

Legacy OCR typically achieves around 80% accuracy, meaning one in five extracted data points requires manual correction: hardly the automation businesses need.

The maintenance burden becomes overwhelming. Each new document variation requires engineering time to write new rules and templates. Companies end up with brittle systems that break whenever document formats change.

This is why revolutionizing document processing requires moving beyond these legacy approaches. AI-powered solutions understand context and meaning instead of just pattern matching, delivering the accuracy and flexibility that modern businesses demand.

How LLMs Change Document Understanding

The breakthrough goes beyond better text recognition: it's genuine comprehension. LLMs can capture contextual information from documents and make sense of it just like a human would, understanding relationships between data points instead of treating each field in isolation.

Traditional systems read documents. LLMs understand them.

LLMs built from the ground up for document processing handle complex documents far beyond traditional OCR abilities, parsing tables, signatures, handwriting, and images while maintaining exceptional accuracy.

The technical foundation relies on sophisticated context engineering. Instead of feeding raw scanned text to models, advanced systems clean and prep documents so important information is preserved. This includes preserving layout and table relationships.

Modern LLMs also excel at semantic chunking: breaking long documents into meaningful sections without losing context. A contract gets segmented by clauses instead of arbitrary page breaks, so each section retains the necessary context for accurate interpretation.

This understanding allows features like citations and reasoning, where the system can explain why it extracted specific values and point to source locations. The result is PDF-to-JSON conversion that preserves both text and layout context.

Business Applications Driving Adoption

The real test of AI document processing isn't in labs; it's in the trenches where businesses handle thousands of documents daily. Financial institutions deal with numerous unstructured documents like invoices, contracts, and tax returns that create massive processing bottlenecks.

Consider mortgage underwriting. Loan officers traditionally spend hours manually reviewing bank statements, pay stubs, and tax documents. AI systems can extract and validate this data in minutes, cross-referencing income figures across multiple documents to flag inconsistencies automatically.

Healthcare organizations face similar challenges with prior authorization intake and insurance claims processing. Medical records parsing that once required specialized staff can now be automated, freeing clinicians to focus on patient care instead of paperwork.

AI-powered document processing tools can automate the extraction of critical data points from these documents, greatly reducing manual effort while improving accuracy.

Logistics companies processing bills of lading see immediate ROI from automated data capture. Instead of manually entering shipping details, customs information, and freight charges, AI systems extract this data in real-time as documents arrive.

The pattern repeats across industries: wherever document-heavy workflows create bottlenecks, AI processing delivers measurable improvements in speed, accuracy, and resource allocation.

The Accuracy Imperative for Mission-Critical Workflows

These aren't minor inconveniences: they're business-critical failures that compound into disasters.

Modern AI systems change this calculus entirely. While global fast-food franchises achieve 82 percent accuracy across complex fields, leading solutions consistently exceed 99% extraction accuracy, a threshold that makes full automation viable for mission-critical workflows.

Built-in validation, confidence scoring, and cross-field checks maintain data quality by flagging low-confidence or inconsistent outputs before use downstream.

Configuration and evaluation tools let teams benchmark performance against labeled datasets, measuring extraction accuracy for each field and tracking precision metrics over time. This quantitative approach builds confidence for production deployments.

Human-in-the-loop workflows provide the final accuracy guarantee. Domain experts can validate edge cases while the system learns from every correction, continuously improving performance on organization-specific documents.

The result changes document processing from a liability into a competitive advantage, letting you automate workflows that previously required extensive manual oversight.

Custom Model Fine-Tuning for Domain Expertise

Generic models understand language, but they don't understand your business. The difference between a general-purpose LLM and one fine-tuned for your specific documents can be disruptive. We're talking about accuracy improvements that make automation viable where it previously failed.

Domain-specific fine-tuning teaches models the nuances of your industry's terminology, document structures, and data relationships. A healthcare model learns that "CBC" refers to complete blood count, while a logistics model understands freight classifications and shipping codes that would confuse general models.

Fine-tuned models process documents faster and understand the business context behind the data, allowing more sophisticated validation and extraction logic.

The technical approach involves training models on your document corpus, teaching them to recognize patterns unique to your workflows. This includes understanding how your invoices are structured, what fields matter most, and how different document sections relate to each other.

The business case is clear: custom models change document processing from a necessary evil into a competitive advantage, delivering accuracy levels that allow full automation of previously manual workflows.

How Extend Delivers Production-Ready Document AI

The gap between AI demos and production systems is where most document processing projects fail.

The technical foundation starts with complete document understanding. While competitors bolt AI onto legacy OCR engines, Extend's LLM-powered architecture handles complex documents far beyond traditional OCR tools: parsing tables, signatures, handwriting, and degraded scans while maintaining exceptional accuracy.

The memory system sets Extend apart from static solutions. The system learns from the visual layout of past documents to improve accuracy on similar files over time, turning every processed document into training data that improves future performance. This continuous learning means accuracy improves automatically as you process more documents.

Customers report bake-off evaluations against numerous vendors with positive results, eliminating whole classes of accuracy problems that plagued other solutions.

Real deployment speed shows the production-ready difference. Customers report cutting development time from months to weeks and improving downstream business metrics by automating document workflows that previously required manual processing.

This complete approach explains why customers consistently choose Extend over alternatives: they surround the best models, with the best context, and the best tooling that actually works in production environments where accuracy and reliability matter most.

FAQs

What accuracy levels can I expect from AI-powered document processing?

Modern AI systems consistently exceed 99% extraction accuracy, compared to the 80% typical of legacy OCR solutions. This accuracy improvement makes full automation viable for mission-critical workflows where errors have serious business consequences.

How long does it take to deploy AI document processing in production?

Most teams can get a prototype running in minutes to hours, with production-grade accuracy achieved in days instead of the traditional months-long AI projects. Some customers report cutting development time from six months to just days.

What's the main difference between AI-powered and traditional OCR systems?

Traditional OCR reads documents using template matching and rules, while AI-powered systems understand context and meaning like humans do. This allows AI to handle complex layouts, handwriting, and document variations that break legacy systems.

When should I consider switching from manual document processing?

If your team is processing hundreds to thousands of documents daily with manual data entry, experiencing bottlenecks in document-heavy workflows, or seeing error rates that impact business decisions, AI automation can deliver immediate ROI through faster processing and higher accuracy.

Final thoughts on AI-powered document processing transformation

The shift from manual document processing to AI automation is no longer optional; it’s the key to staying competitive as unstructured data continues to surge. Organizations that welcome intelligent automation now are unlocking faster workflows, higher accuracy, and more time for strategic changes, while others fall behind. Find out how production-ready document AI from Extend can turn bottlenecks into lasting competitive advantages, and how quickly your team can make it happen.

WHY EXTEND?