Tired of rewriting prompts every time your business rules shift? That constant maintenance is why enterprises are embracing context engineering, a smarter foundation where knowledge, validation rules, and operational logic live in one persistent layer that AI systems access dynamically. This evolution from fragile prompts to adaptive context became undeniable, with tools empowering teams to build context-aware document processing pipelines that scale effortlessly.

TLDR:

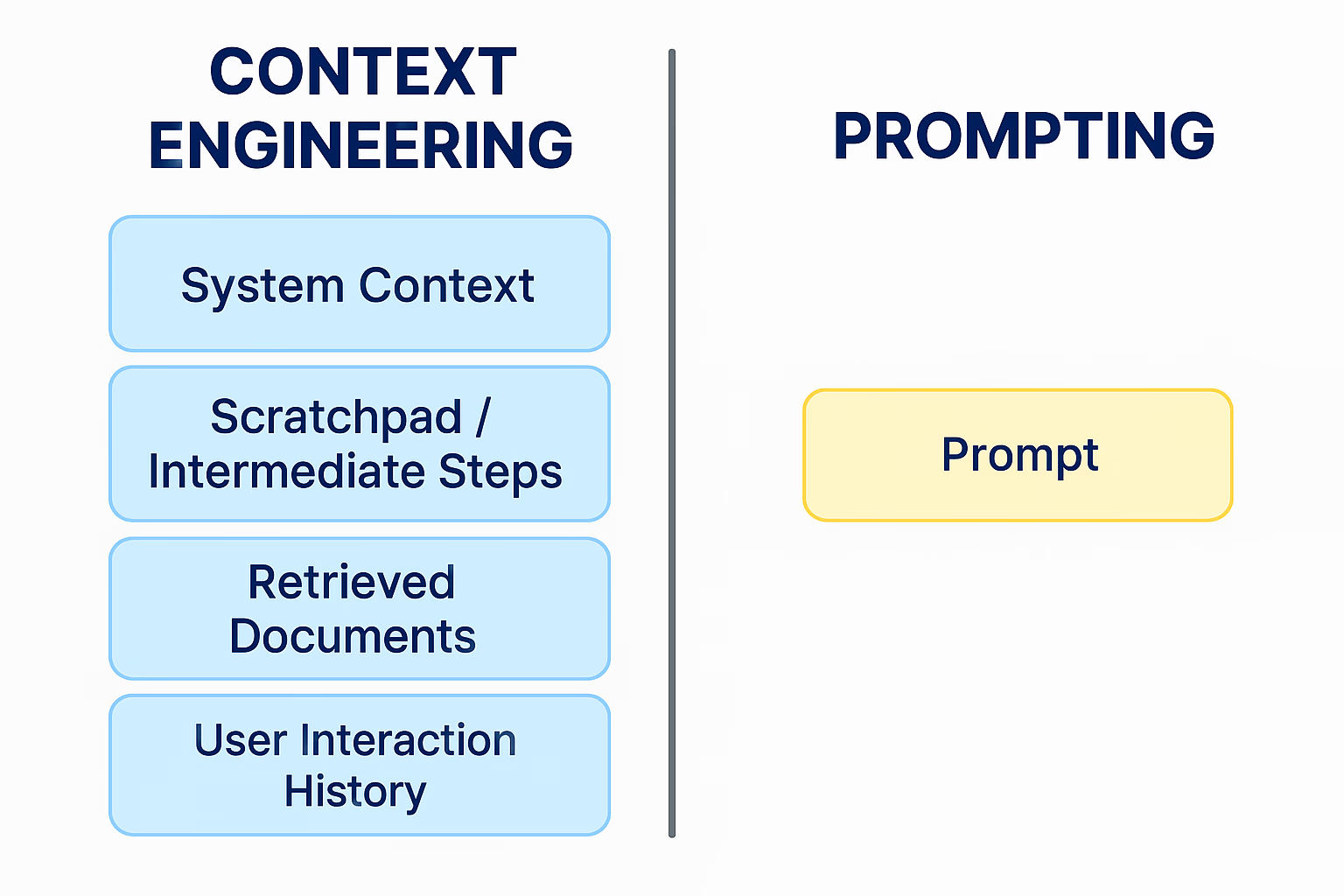

- Context engineering structures persistent business rules and operational state that AI systems access across interactions, while prompt engineering writes isolated instructions for each query

- Context editing delivers 10.6% better performance than model fine-tuning with 86.9% lower latency

- Agentic AI systems require shared context to maintain situational awareness across autonomous decisions like invoice approvals and document routing

- Document processing exposes prompt engineering's limits because validation requires checking against purchase orders, vendor contracts, and historical patterns

- Complete document processing toolkits comprised of the most accurate parsing, extraction, and splitting APIs ship your hardest use cases in minutes, not months

Context Engineering vs Prompt Engineering: Understanding the Shift

Context engineering structures the environment where AI operates. Prompt engineering refines the instructions you give. The first builds the foundation, the second polishes the request.

Input Architecture

Prompt engineering treats each interaction as isolated. You write instructions, the model responds, and the next query starts fresh. Context engineering loads persistent state: your company's document schemas, approved vendor lists, historical processing decisions, and validation rules that remain active across every interaction.

When processing invoices, prompt engineering asks "Extract line items with their amounts." Context engineering provides the AI with your GL code taxonomy, approved vendor database, historical invoice patterns, and validation thresholds before any extraction begins.

Maintenance Overhead

Prompt libraries become technical debt. When business rules change, teams hunt through prompt repositories, updating instructions across multiple templates. Context engineering separates business logic from prompts, reducing rework when models or rules change.

Output Consistency

Prompt engineering produces variable results. Slight wording changes alter outputs. Different team members write different prompts for identical tasks. The same prompt can yield different results across model versions.

Context engineering enforces structural consistency. When AI systems operate within defined schemas, validation rules, and business constraints, outputs conform to enterprise standards regardless of how queries are phrased.

The Context Engineering Tipping Point

Stanford University, SambaNova Systems, and UC Berkeley released the ACE framework, proving that editing input context outperformed model fine-tuning. The framework delivered 10.6% improvement on agentic tasks, 8.6% gains on financial reasoning, and 86.9% average latency reduction.

The research validated a core principle: context architecture matters more than prompt optimization. Organizations processing millions of documents couldn't scale prompt engineering approaches. They needed systems that self-improved through context evolution, not manual prompt refinement. The research and enterprise pain points converged, establishing context engineering as a distinct discipline with measurable ROI.

The Enterprise Context Crisis: Why Prompt Engineering Failed at Scale

Enterprise document workflows expose prompt engineering's core weakness: no memory between interactions.

Agentic AI systems break down under prompt engineering. Without shared context, agents misalign on priorities, duplicate validation checks, or ignore dependencies. One agent extracts data while another applies outdated rules because neither accesses the same operational state.

Prompt engineering lacks persistent memory. Each interaction starts from zero. You can embed context into prompts, but token limits constrain how much business knowledge fits. Critical information gets truncated.

Context engineering fixes this through managed state. Vendor databases, validation rules, and processing history stay accessible across every agent interaction. When business rules change, all agents operate from updated context without rewriting prompts.

Agentic AI Systems Drive Context Engineering Adoption

AI agents execute actions: approving vendor payments, routing documents to departments, updating accounting systems, triggering compliance reviews. Each decision requires understanding current state, business rules, approval hierarchies, and downstream consequences.

Context Engineering Framework: The Four Pillars of Implementation

Organizations implementing context engineering need four foundational components working together.

Structured Knowledge Repositories

Business rules, document schemas, and validation logic must live in queryable formats. Converting approval workflows, vendor databases, and compliance requirements into structured data allows AI systems to access information dynamically rather than encoding business knowledge into brittle prompts.

State Management Infrastructure

AI agents require persistent memory across interactions to track processing history, flag exceptions, and maintain operational state. When one agent extracts invoice data, another agent should access that context when applying validation rules.

Dynamic Context Injection

Pipelines that load relevant context based on task requirements make context situational rather than static. Processing a vendor invoice should automatically retrieve that vendor's history, contract terms, and approval thresholds.

Feedback Loops

Capturing corrections, exceptions, and edge cases refines context over time. When humans override agent decisions or flag errors, feeding that signal back into knowledge repositories allows the context architecture to improve from operational data, reducing manual intervention with each iteration.

Document Processing: Context Engineering's First Killer Application

Document processing exposes the limits of prompt engineering because documents require understanding beyond text extraction. An invoice contains numbers and dates, but processing it means validating against purchase orders, vendor contracts, budget approvals, and historical patterns.

Prompt engineering extracts the total amount. Context engineering validates the invoice against the PO, confirms vendor approval, checks line items against contract rates, and compares amounts to historical spend patterns. That situational awareness separates functional extraction from production-ready automation.

Organizations need validation against operational context, routing based on approval hierarchies, and exception handling informed by processing history, not just data pulled from PDFs.

Context Engineering Challenges: Poisoning, Collapse, and Complexity

Context poisoning occurs when incorrect data corrupts your knowledge repositories. A single mislabeled document or incorrect validation rule propagates through every subsequent interaction. Unlike prompt errors that affect one query, poisoned context damages every workflow that touches contaminated knowledge.

Context collapse happens when AI systems receive too much information. Loading every vendor record, all historical invoices, and complete approval hierarchies into each interaction overwhelms models with irrelevant data. Performance degrades as signal drowns in noise.

Managing context complexity requires careful architecture. Organizations need governance for who updates knowledge repositories, version control for context changes, and monitoring to detect when context quality degrades. Scratchpad methods can deliver up to 54% improvement in specialized agent benchmarks by preventing internal contradictions from disrupting reasoning chains.

Validating context freshness adds operational overhead. Business rules change, vendors get added or removed, and compliance requirements evolve. Stale context produces errors as confidently as fresh context, making detection harder than with prompt-based approaches where failures are immediate and obvious.

Building Context-Aware AI Systems: From Experimentation to Production

Pilot projects succeed when scope stays narrow. Production deployments fail when organizations skip infrastructure planning. The gap between extracting data from 100 test invoices and processing 100,000 monthly documents across departments isn't prompt refinement, it's context architecture.

Extend provides the production-grade foundation for that leap. Its cloud-native platform unifies parsing, extraction, classification, and validation into a cohesive document intelligence stack built for persistent context. Teams move from isolated pilots to scalable, governed workflows without reinventing pipelines for every document type.

Start with Measurable Workflows

Extend’s pre-built processors and APIs let teams stand up invoice, contract, or claims automation in days, not months. Each workflow starts with measurable accuracy targets, confidence scoring, and benchmarking tools that reveal real-world ROI before full rollout.

Build Governance Early

Extend’s orchestration layer manages schema versions, rule updates, and user permissions in one place. This ensures context quality stays consistent as business rules evolve, eliminating the “prompt debt” that cripples traditional document AI initiatives.

Instrument for Observability

Through native analytics and API-level tracing, Extend tracks which context sources agents query, how long retrievals take, and when confidence falls below thresholds. Teams gain full visibility into operational context performance and model reliability.

Scale Quality Through Human Review

Extend’s built-in Human-in-the-Loop interface routes low-confidence results to reviewers, captures corrections, and feeds validated data directly back into context repositories.

Start with Measurable Workflows

Extend’s pre-built processors and APIs let teams stand up invoice, contract, or claims automation in days, not months. Each workflow starts with measurable accuracy targets, confidence scoring, and benchmarking tools that reveal real-world ROI before full rollout.

Build Governance Early

Extend’s orchestration layer manages schema versions, rule updates, and user permissions in one place. This ensures context quality stays consistent as business rules evolve, eliminating the “prompt debt” that cripples traditional document AI initiatives.

Final thoughts on the evolution of AI system design

Prompt engineering may have fueled the chatbot era, but context engineering drives the intelligent, autonomous systems now transforming enterprise operations. From invoice approvals to contract analysis, AI agents need persistent situational awareness, not isolated prompts, to make reliable decisions at scale. Partner with Extend to architect context-driven workflows that evolve with your business, not against it.

Extend goes beyond simple document conversion, offering a full-stack document intelligence system that handles parsing, extraction, evaluation, and optimization in one unified system

FAQs

What is context engineering in AI?

Context engineering structures the operational environment where AI systems work by providing persistent access to business rules, document schemas, validation logic, and historical decisions. Unlike prompt engineering which treats each interaction as isolated, context engineering loads your company's knowledge, vendor databases, approval hierarchies, processing patterns, that remains active across all AI interactions.

How does context engineering improve document processing accuracy?

Context engineering validates extractions against your operational reality, checking invoices against purchase orders, confirming vendor approval status, comparing amounts to historical patterns, and applying your specific GL codes. This situational awareness catches errors that pure extraction misses, delivering production-ready automation instead of just pulling data from PDFs.

How long does it take to implement context engineering for document workflows?

Pilot projects with narrow scope (single document type, one department) typically take 2-4 weeks to validate. Production deployment across multiple workflows could take longer to build proper infrastructure: knowledge repositories, state management, dynamic context injection, and feedback loops. Organizations that skip infrastructure planning fail when scaling beyond initial tests.